Database Music

A History, Technology, and Aesthetics of the Database in Music Composition

by

Federico Nicolás Cámara Halac

A dissertation submitted in partial fulfillment

of the requirements for the degree of

Doctor of Philosophy

Department of Music

New York University

May, 2019

Jaime Oliver La Rosa

© Federico Nicolás Cámara Halac

All Rights Reserved, 2019

For my mother and father, who have always taught me never to give up on my research, even during the most difficult times. Also to my advisor, Jaime Oliver La Rosa, without whose help and continuous guidance this would never have been possible. For Elizabeth Hoffman and Judy Klein, who always believed in me, and whose words and music I bring everywhere. Finally to Aye, whose love I cannot even begin to describe.

I would like to thank my advisor, Jaime Oliver La Rosa, for his role in inspiring this project, as well as his commitment to research, clarity, and academic rigor. I am also indebted to committee members Martin Daughtry and Elizabeth Hoffman, for their ongoing guidance and support even at the very early stages of this project, and William Brent and Robert Rowe, whose insightful, thought-provoking input brought this dissertation to fruition. I am also everlastingly grateful to Judy Klein, for always being available to listen and share her listening, and to Aye, for her endless support and for helping me maintain hope in developing this project. I would also like to thank my parents, Ana and Hector, who inspired and nurtured my interest in music from a young age, and my sister Flor and my brother Joaquin, who were always with me, next to every word. Finally, this dissertation would not have been possible without the support and help of my friends, some of whom I would like to mention by name because they directly affected certain aspects of this text. I would like to thank Matias Delgadino and Lucia Simonelli, for their continuous layers of abstraction; Matias Borg Oviedo, for those endless conversations; Ioannis Angelakis, for his glass sculptures.

(The initial title that explains how databases are everywhere) The name of the database. (Now think of the data, and the base, and how these relate) These words must point to two things placed one inside the other. (Is it base in data or is it data in base?) The base (of the data). A basement, a basis, a basic foundation for data. The house where data resides. (Is it the base or the data that is economical? Or both?) The addresses in which they are located. (Clearly, you are talking about pointers) The discretized space that guards data. (Guardians of space? This starts to look like a bad sci-fi thing…) Data as in the plurality of datum, and database as the plain (planicie in spanish) or the lattice upon which the address space is laid for data. (Data under house arrest) Datum as in bit, as the zero or the one, and nothing in between (Are you sure there is nothing in the middle?) Data as in bytes, and the eight bits that follow it around (like ducklings without mom [pata in spanish]) Data as in data types, the many names of the binary words representing the values of almost all numbers (and this ‘almost’ is still more than enough (Some would disagree)). Data as in data structures and their unions, symbols, lists, tables, arrays, sequences, dictionaries, simultaneously pointing to their interfaces and their implementations and assemblage (The assembly is in order) Data as in files fichiers in french, archivos in spanish, and their kilobytes and megabytes (inside directories and folders, etc.) Data as in data flow and data streams (are there data fountains?) 
Data you translate from slot to slot, transmit from client to server to client, transduce to and fro with adc and dac , transcode from format to format (transgress from torrent to torrent) Data as in dataset for your algorithms to test, to improve, to fit, to make them more efficient, to teach them the right tendencies, to drive your models data-driven (You are driving me crazy) Data as in data banks (also its transactions and currency) Data as in data corpus (Oh, so it has body?) Data as in database (finally), basing gigabytes with models meant for system management and warehouses, repositories, terabytes, their mining, and their subsequent data clouds, clusters, spacing out into the (in)famous big data leap from bit to big.

The aim of this dissertation is to outline a framework to discuss the aesthetic agency of the database in music composition. I place my dissertation in relation to existing scholarship, artists, and developers working in the fields of music composition, computer science, affect, and ontology, with emphasis on the ubiquity of databases and on the need to reflect on their practice, particularly in relation to databasing and music composition. There is a database everywhere, anytime, always already affecting our lives; it is an agent in our aesthetic and political lives just as much as we are agents in its composition and performance. Database music lives in between computers and sound. My argument is that in order to conceptualize the agency of the database in music composition, we need to trace the history of the practice, in both its technical and its artistic use, so as to find nodes of action that have an effect on the resulting aesthetics. Therefore, this dissertation is composed of two main sections.

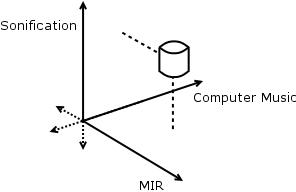

In the first part, I trace a history of database practices from three points of view. The first is from new media theory, emphasizing the intersection between the database and the body. The second is from the history of the database in computer science, giving a panoramic view of the tools and concepts behind database systems, models, structures. The third is from their use in sound practices, describing different approaches to databasing from the fields of music information retrieval, sonification, and computer music.

In the second part, I discuss the agency of the database under the broader concepts of sound, self, and community. These three axes are addressed in four sections, each with a different perspective. First I focus on listening and present the database as a resonant subject in a networked relation and community with the human. Second, I focus on memory, comparing human memory and writing with digital information storing, thus relating databasing and composition with memory, archives, and their spectrality. Third, I analyze the performativity of databasing, understanding the database as gendered, in its temporality, repetition, and in its contingent appearance as style, skin, and timbre. In the last section, I revise the notion of music work, reflecting on the consequences of the anarchic and the inoperative in the community of database music.

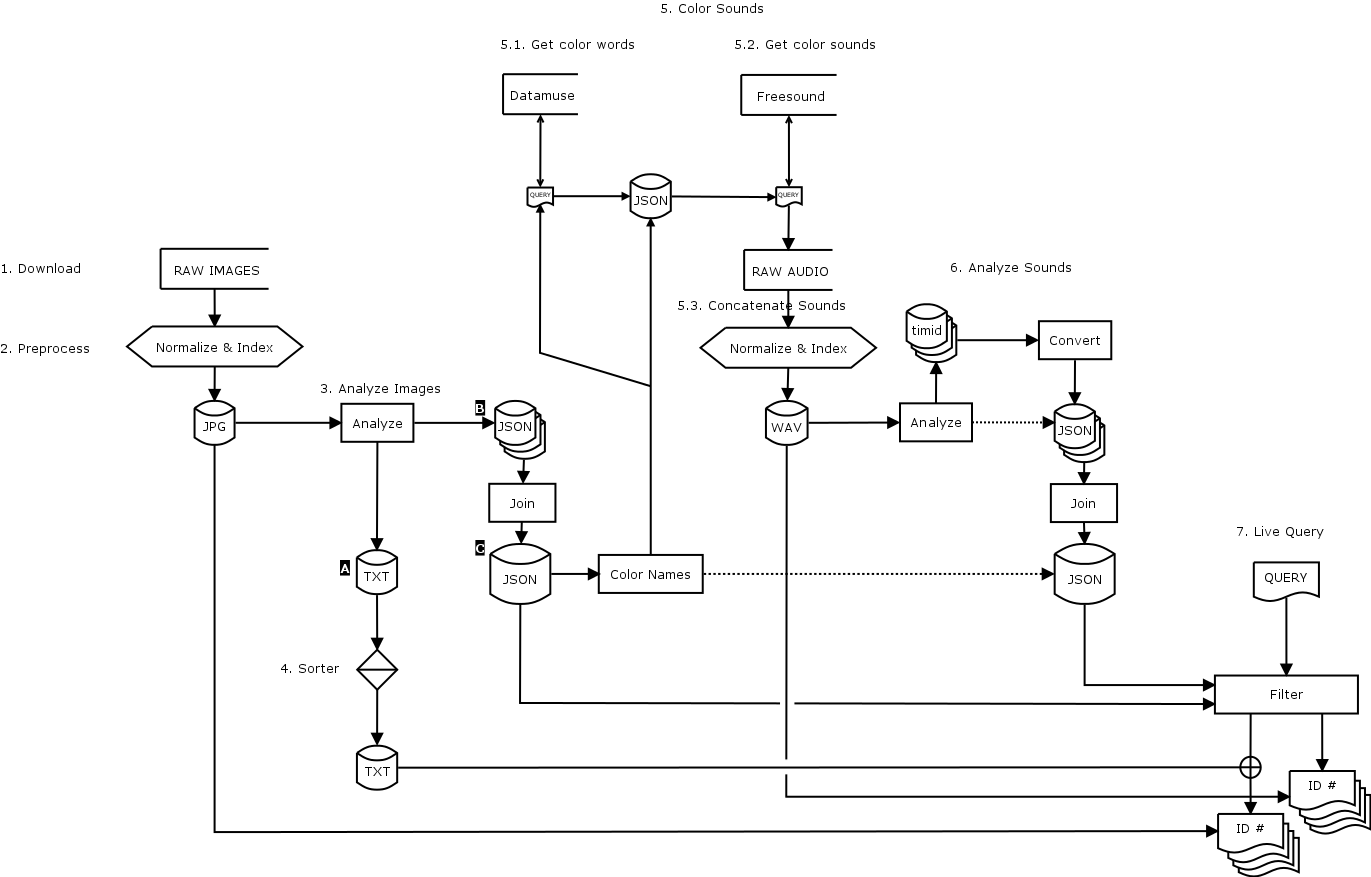

As an appendix, I present an open-source library for making and performing a multimodal database that combines computer vision and timbre analysis algorithms to generate a database of image and audio descriptors suitable for automated navigation, querying, and live audio-visual performance.

This dissertation begins with a word, database, and a proposition: Is there something we can call database music? This sudden jump from noun to adjective comes not without its audible retaliation, and I make no attempt to muffle it. The reader would perhaps find it useful to know that my condition of composer has guided my research, and made me jump too quickly at the opportunity to make some sound in this initial gesture. Nevertheless, there is a sound and we can listen to it.

In the literature that I have mined (for this dissertation is not only a text, but the sedimented layers of text that I initially traversed with keywords in database queries, such as “database AND music”), the database has many histories and many names attached to it. I make no attempt to cover all of these, but I admit that I have (foolishly) tried: the subtitle does begin “a history….” However, this is ‘a’ history and not ‘the’ history and thus there are gaps and missing parts to which this text is inevitably bound. It is also the ‘a’ that accounts for the path that I begin delineating in the digital house (or database) that is this dissertation.

The two nodes that compose the focus of its text, database and music, each have their own historical, technical, and aesthetic idiosyncrasies. The primary goal of this dissertation (if I dare to say that it has one) is thus to find where database and music intersect. The ‘AND’ in our query indeed returned far fewer results than the OR, which meant my quest was already promising some reduction. For instance, I decided that the search would only pertain to situations in which computers were involved (“with, perhaps, the exception of…” 1.1). Needless to say, not only was my search dramatically expanded through the plethora of database applications, the history of their systematization, and the ongoing struggle between models, it also opened up the programming world, and with it the history of programming languages, and of the computer itself. Despite this broadening of the field, the conjugation ‘database music’ narrowed down to computer-based music practices, leaving out music made without (and also music made before) the emergence of computers.

Upon this abysmal enterprise, I did as any musician would and started listening for the sound of databases. I realized that this is not just the sound of your computer reading from its hard drive: it is the sound made with its software. At this point, a network (and this is one of the key terms throughout this text) of sonic software had begun to appear. What this network pointed to, besides the key to open door number one, was a certain silence that much of the literature relating to databases in art continues to abide by: a sonic silence (a silence regarding sonic practices). This relates to the “history, technology…” part of the subtitle. In addressing this silence, I do not attempt to invalidate previous approaches to theorizing database art (Manovich 2001, Vesna 2007). On the contrary, these texts have shed light on the key concepts with which I have traversed the sonic software I present, the types of programming decisions I discuss, the various disciplines I place at the intersection of computers and music, and the plurality of shapes that have appeared in relation to databases: door number two. Through these authors, I introduce notions of embodiment, virtuality, and framing drawn from posthumanism (Hayles 1999) and new media theory (Hansen 2004), in order to contextualize the role of the database within the practices of MIR, Sonification, and Computer Music. Each one of these disciplines has its own history, evidenced by the many conference proceedings and journals that I have also mined, as well as the various authors (most of them composers, and most of them programmers) that I refer to. In between these two, that is, in between my exploration of the database in new media theory, and its corresponding exploration within sound practices, I introduce the more technical evolution of the database, in order to develop a secondary concept that speaks of the performativity of databases: databasing. I use this non-existing gerund to refer to all the actions that need to take place around databases, whether these are performed by humans or not, and I refer to these users as databasers.

After having reached this point, in which the intersection of the database and music was covered in terms of its facticity, as the evidence of a motion, the trace of the database, I could not help but notice door number three. I attempted to connect database practices to theories of listening, memory, and networks to outline a framework for discussing the aesthetic agency of database music. I began listening to a certain sound of networks, a certain resonance. This door refers to the next part of the subtitle “…aesthetics of the database…” and opened up to the complex world of sound, flipping this text horizontally: music AND database. (Bang!) The meaninglessness of this reversal, at least semantically or even in terms of a database query, became precisely the point. The sound of databases that I delineate through the first two doors now reached a point where it faced a difference. I enter first with Nancy’s (2007) ontology of sound, with which I understand the networks of music software as resonant networks. With this move, the database sounds as an actor (Latour 1990) that reconfigures the way we think communities (Nancy 1991). The implications of this association led me to distinguish databases, memory, and archives, and find within their connections a spectral form of authority (Derrida 1978, Derrida & Prenowitz 1995). The final move extends towards the activity of the (human) body, and the relationship between database, gender, and performance (Butler 1988). These three nodes (resonance, spectrality, and performance) encompass an aesthetics of database music that I plan only to suggest throughout this text, but that I will leave, to a certain extent, unexplained.1

Up to this point, my argument might seem to have arrived at an end: I contextualize and define database practices (chapter 1); I develop a technical overview of the performativity of the database into what I call databasing (chapter 2); I review the existing literature with emphasis on sound practices (chapter 3); I conceptualize sound in terms of resonance, networks, and community (chapter 4); I delineate the differences between database, memory, and archive, in order to present the spectrality of databases (chapter 5); I develop databasing in terms of performance, gender, and style in relation to databases (chapter 6). However, there is yet one more leap that the more adventurous reader might take with the final chapter of this dissertation. In chapter 7, I bring the discussion of database and music to the latter part of the subtitle “in music composition.” With this chapter (a fourth door) I engage with the work of music composition. That is to say, with the history of the database in mind, I rethink the activity of composing (Vaggione 2001), the role of the composer (Lewis 1999), and the operativity of the music work (Cascone 2000). The reader should be warned that this last chapter has no conclusions, let alone answers. Neither does it have proper questions. It can be held as an attempt to incite, if anything, a provocation before the question.

I have already warned the reader about the aesthetic impulse of a composer writing a dissertation, but that should discourage neither academic rigor nor literary thirst. I have thus included an exhaustive bibliography, interludes, a transcription, and a postlude (work in progress), together with graphs, tables, a list of acronyms, a glossary, and some snippets of pseudocode and sometimes working code. In the hope that the content of this dissertation will open the discussion about the role of databases beyond their technical implementation and into their roles in musical aesthetics; and in the hope that you continue reading these p{age,ixel}s, I will (simply) end these introductory remarks.2

The world appears to us as an endless and unstructured collection of images, texts, and other data records, it is only appropriate that we will be moved to model it as a database —but it is also appropriate that we would want to develop the poetics, aesthetics, and ethics of this database. (Manovich 2001, p. 219)

To point to the origin of the database as it is known today is not an easy task. Certainly, databases are closely related to the history of computers, but they also relate to the history of lists. The common link between these two is the fact that they are written (on a memory card, on a page), which would take their history back to the origins of the written word…. However, there is a point where the history of storage takes an operational turn. At this point, the ‘word’ becomes a type of data, and data begins to bloom exponentially, driving faster and more efficient storage and retrieval technologies. Database systems were modelled hand-in-hand with computer languages and architectures from the late 1950s until the present day, when they continue to be developed for almost all aspects of the business world.

In the artworld of the 1990s, the increasing availability of personal desktop computers (with software suites, programming languages, and compilers) resulted in the emergence of new media art. Lev Manovich (Manovich 2001) was the first media historian to argue that the database became the center of the creative process in the computer age. The database had become the content and the form of the artwork. Furthermore, Manovich recognized that the artwork itself had become an interface to a database; an interface whose variability allowed the same content to appear in individualized narratives. Thus, he claimed that narrative and meaning in new media art had been reconfigured. Narrative became the trajectory through the database (Manovich 2001, p. 227), and meaning became tethered to the internal arrangement of data.3 Therefore, for Manovich, the “ontology of the world as seen by a computer” (Manovich 2001, p. 223) was the symbiotic relationship between algorithms and data structures. As a consequence of the use of databases in art, the architecture of the computer was transferred to culture at large (Manovich 2001, p. 235). Manovich’s ‘database as symbolic form’ thus became a technologically determined shadow that haunted much of new media.

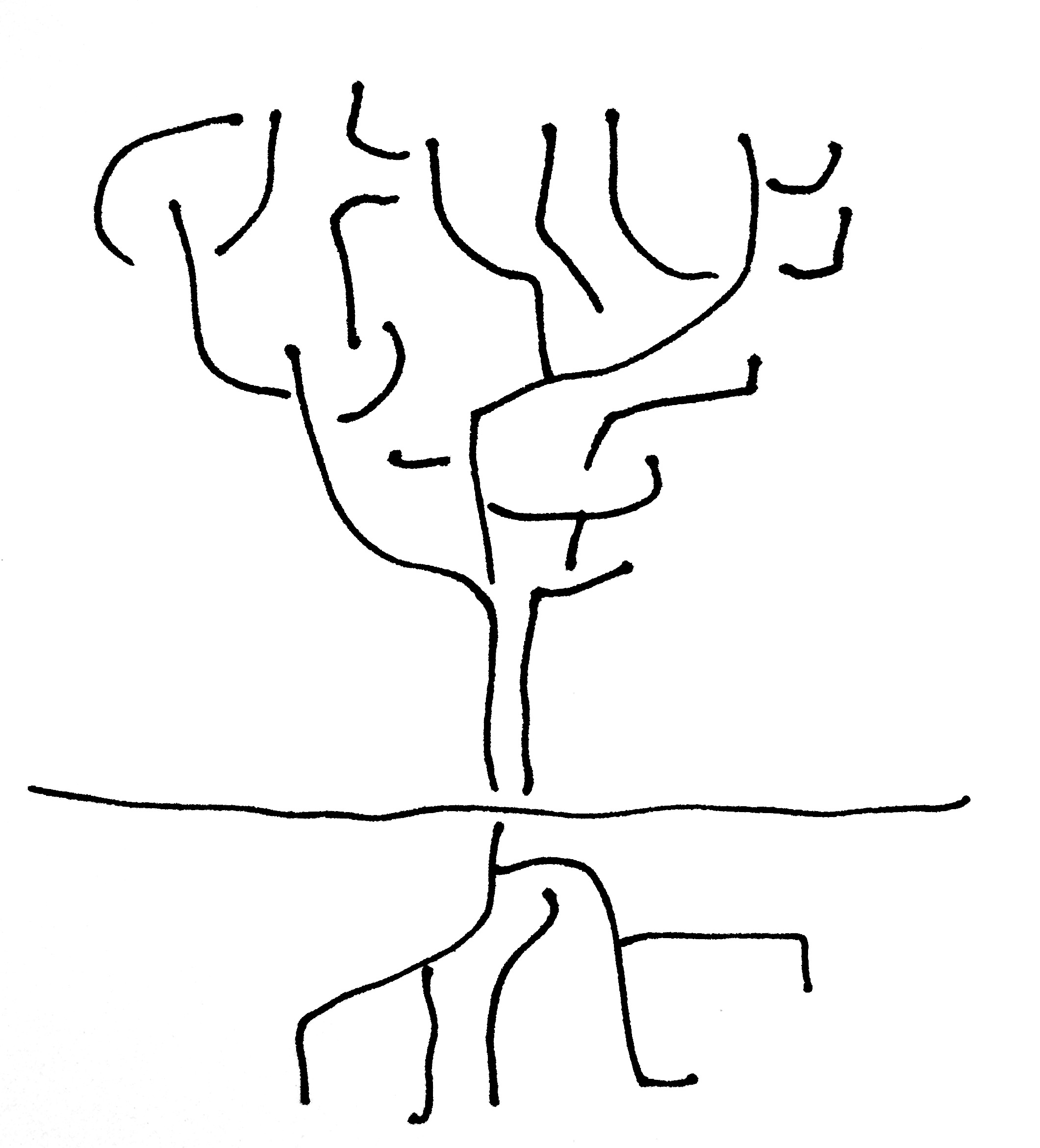

In order to reveal the extent to which the presence of the database has a radical effect on narrative, however, Manovich reverses the semiotic theory of syntagm and paradigm that governed much of the 20th century (Manovich 2001, p. 231). Manovich describes the paradigm as a relation subjected to substitution, and the syntagm as a relation subjected to combination. For example, from the entire set of words in a language (the paradigm) a speaker constructs a speech (the syntagm): the paradigm is implicit (absent) and the syntagm is explicit (present). The relation between these two planes (of the paradigmatic and the syntagmatic) is established by the dependence of the latter on the former: “the two planes are linked in such a way that the syntagm cannot ‘progress’ except by calling successively on new units taken from the associative plane [i.e., the paradigm]” (Barthes et al. 1968, p. 59). Barthes gave several examples with different ‘systems,’ one of which was the food system. Put simply, the dish (as a set of choices with which to make a dish) is the paradigm and what you are eating is the syntagm (Barthes et al. 1968, p. 63). However, when one looks at the restaurant’s ‘menu’, one can glance at both planes simultaneously: “[the menu] actualizes both planes: the horizontal reading of the entrées, for instance, corresponds to the [paradigm], the vertical reading of the menu corresponds to the syntagm” (Barthes et al. 1968, p. 63).

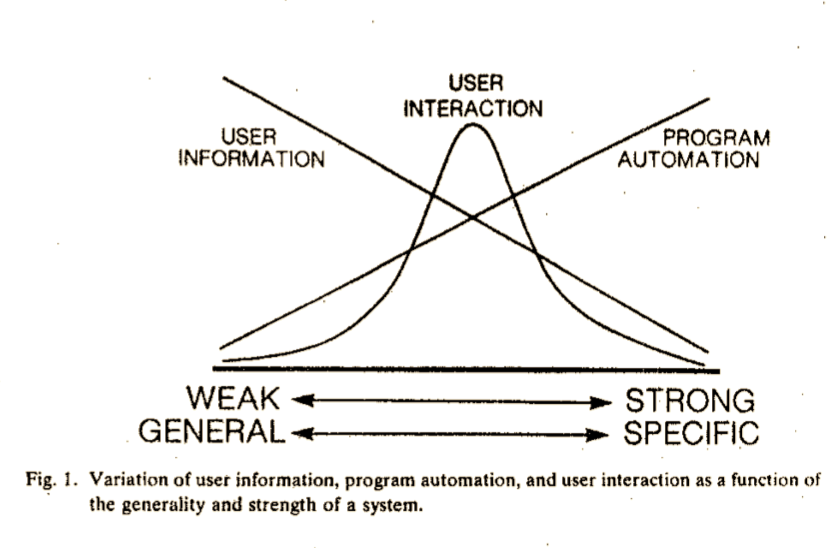

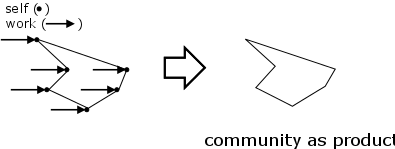

A software menu would come to represent both planes as well: the paradigm is the set of all possible actions the user might make within the specific context of the menu; the syntagm is the actual sequence of clicks that the user makes. Manovich points to a reversal of these planes (see Figure 1.1). Given the concrete presence of the database (the options on the software menu), and given the hyperlinked quality of the user interface (those options are clickable links), the database becomes explicit (present) and the sequence of clicks becomes implicit (absent, ‘dematerialised’). Since Barthes’ project was focused mostly on the distinction between speech (as syntagm) and language (as paradigm), this is how the database was first understood in art. On the one hand, narrative (speech) is the syntagm: it is the trajectory through the navigational space of a database, and since this narrative is abstracted, its concreteness is achieved through the interface, so that interface and narrative depend on each other. The result is an interlocking of narrative and interface that leads to the conception of the interface-as-artwork. On the other hand, the database is the paradigm (language), since it represents the set of elements to be selected by the user.

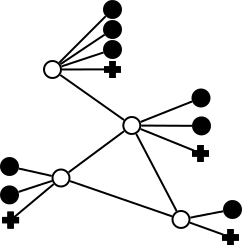

Figure 1.1. Top: syntagm, paradigm, and their relation. Bottom: narrative, database, and their reversed relation.

For example, consider the case of the typical timeline-view of a video editor.4 Normally, the user creates a session and imports files to working memory, creating a database of files (video files, in this case). Once this database is in working memory, the user places the videos on a timeline, cutting and processing them at will, until an export or a render is made.5 The timeline where the user places the videos is a visualization of the “set of links” to those files on disk, and not their actual data. Such a timeline is an editable graph that allows the user to place in time the pointers to the elements in the database. This is what Manovich means by “a set of links,” because the user is not handling the files themselves (as would be the case with an analog video editor, where the user cuts and pastes the magnetic tape), but the extremely abstract concept of memory pointers.
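The timeline-as-links idea can be sketched in a few lines of code. What follows is only an illustration under my own assumptions, not the design of any actual video editor: the names `Clip`, `Link`, `media_pool`, `timeline`, and the file paths are all hypothetical. The point it shows is that the timeline holds references into the database of files, and that "editing" rearranges those references without touching the files.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Clip:
    """A hypothetical record in the session's database of imported files."""
    path: str
    duration: float  # seconds


@dataclass
class Link:
    """A timeline entry: a reference to a clip plus in/out points, not a copy of its data."""
    clip_id: str
    start: float
    end: float


# The database: imported files, keyed by an identifier (paths are made up).
media_pool = {
    "a": Clip("footage/a.mov", 12.0),
    "b": Clip("footage/b.mov", 30.0),
}

# The timeline: an editable, ordered set of links into the database.
# The same clip can be linked more than once without being duplicated.
timeline = [Link("a", 0.0, 4.0), Link("b", 10.0, 15.0), Link("a", 6.0, 8.0)]


def render(links, pool):
    """Resolve each link against the pool, in playback order."""
    return [(pool[link.clip_id].path, link.start, link.end) for link in links]


def total_duration(links):
    """Length of the edit, computed from the links alone."""
    return sum(link.end - link.start for link in links)


edit = render(timeline, media_pool)
```

Only `render` ever looks inside the pool; every other operation on the timeline (reordering, trimming, repeating a clip) acts on the links.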

I consider this reversal to be valid as a shift from one-to-many to many-to-one (see Figure 1.1). The question of the materiality of the database and of the pointers depends on the materiality of data. Links or pointers have, for Manovich, a different (absent-like) status in relation to stored memory itself. This is because of a distinction between pointers and data on the basis of their use: pointers are of a different nature since they do not store data directly. Instead, they refer to the address in memory where a specific piece of stored data begins. However, the mutual binary condition of pointers and data, and the fact that they are both stored in the same memory, reveal Manovich’s reversal to be somewhat misleading. Pointers are just another data type, however functionally different they may be. If one understands them as moving bodies, it follows that pointers are ‘lighter’ and travel much faster than other data types, which are ‘heavier’ and slower to move. However, data types are not moving bodies at all, and thinking of them as such locks us into a semiotic trap: accepting this reversal means accepting a certain materiality of data, which is different from a certain materiality of information.
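The claim that pointers are just another data type can be made concrete with a small sketch. It assumes the CPython interpreter and its standard `ctypes` module, and the variable names are mine. An address is an integer, that integer is stored as a fixed-size binary word like any other value, and following the pointer means decoding the word and reading the memory it names.

```python
import ctypes
import sys

# Some stored data: the bytes of a word, held in a memory buffer.
data = ctypes.create_string_buffer(b"database")

# A "pointer" to that data is the address where the buffer begins: an integer.
address = ctypes.addressof(data)

# That integer is itself stored as a fixed-size binary word, like any other value.
pointer_size = ctypes.sizeof(ctypes.c_void_p)
pointer_bytes = address.to_bytes(pointer_size, sys.byteorder)

# Following the pointer: decode the address and read the bytes found there.
decoded = int.from_bytes(pointer_bytes, sys.byteorder)
recovered = ctypes.string_at(decoded, len(b"database"))
```

The pointer here occupies as many bytes as the machine's address width, whatever the size of the data it refers to, which is the only sense in which it is 'lighter'.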

A mere ‘byproduct’ of pleasure, entertainment is a hangover from the media epoch: a function that caters to our (soon to become obsolescent) need for imaginary materialization through technology [which, in turn,] serves as a diversion to keep us ignorant of the operative level at which information, and hence reality, is programmed. [emphasis added] (Hansen 2002, p. 59)

I find in Manovich a silent allegiance to German media theorist Friedrich Kittler’s concept of digital convergence. Digital convergence entails that the bodily resonance of media becomes obsolete in the face of absolute digital information storage. It thus turns the human into a “dependent variable” (Hansen 2002, p. 59). In the case of physical media, the human body was, for Kittler, directly shaped by media, and the limit of this ‘shaping’ was set by the bodily limits of perception. The body became a by-product of media. However, in the age of digital convergence, of an “absolute system of information” (Hansen 2002, p. 63), media remove this bodily limit of perception, making the human body a residual product. The body, then, becomes a residue of digital industries.

For example, the extent of this residual aspect of the human can be seen in writer Norman Klein’s considerations of the author (Klein 2007). Following Manovich’s interface-as-artwork, Klein argues that since the reader gets immersed in data, “[she] evolves pleasantly into the author” (Klein 2007, p. 93). Because the reader participates in the narrative, the result is a reconfigured concept of shared authorship. However, Klein continues, “instead of an ending, the reader imagines herself about to start writing” (Klein 2007, p. 93). This surprising twist in Klein’s consideration adds another layer of complexity, namely, the categorical difference between ‘writing’ and ‘not-yet-writing.’ In Klein’s sense, narrative constitutes a promise of authority that equally blurs the roles of the writer and of the reader. Most importantly, this blurred authority is seen as a reflection of control and subordination of the human. In this view, the potentiality of authority arising from the trajectory through the database belongs neither to the reader nor to the writer: it is appropriated by the database. The roles of the reader and the writer fade into each other and vanish, allowing the database to be a dominant middle term. In other words, human agency is absorbed into a shadow, making the database the sole agent to which the human is subjected. In Klein’s own words, the human is a ‘slave’ to data, and as a consequence the human is economically ‘colonized’ and ‘psychologically invaded’ by the evolving force of computers, information, or technology in general (Klein 2007, pp. 86–88). Authority converges, too, in the age of digital convergence.

Media theorist Mark Poster defines technological determinism as the “anxiety at the possibility of [the human mind’s] diminution should these external [technological] objects rise up and threaten it” (Poster 2011 X). In other words, it is the fear or anxiety that the human is ultimately subjected to the power of technology. Understanding new media as digital convergence leads to reading the ‘new’ in new media as the ‘digital.’ In reaction to the anxieties that this convergence brings, and from an embodied approach where databases have an aesthetic agency in resonance with the human, in what follows I propose to shift the focus from narrative (interface) to performance (databasing), and to reconfigure the shadow of the database as a hybrid skin exposing the human and the non.

The disembodiment of information was not inevitable, any more than it is inevitable we continue to accept the idea that we are essentially informational patterns. (Hayles 1999, p. 22)

Media theorist N. Katherine Hayles (Hayles 1999) unearths the theoretical context of cybernetics, upon which the posthuman has been constructed throughout the 20th century. She identifies three waves of cybernetics, each governed by different concepts which helped build the undergirding structures of the technologically determined and disembodied literature in vogue in the 1990s.

The foundational wave of cybernetics (from 1945 to 1960) was built, among other concepts, on two main theories: John von Neumann’s architecture of the digital computer (See 2.1) and Claude Shannon’s theory of information. As “a probability function with no dimensions, no materiality, and no necessary connection with meaning” (Hayles 1999, p. 18), Shannon’s formal definition of information within communication systems highlighted pattern over randomness (Hayles 1999, p. 33). Therefore, disembodied information became a signal to be encoded, decoded, and isolated from noise.

The word ‘cybernetics’ [steersman] thus synthesized three central aspects: information, communication, and control. Since the human was seen as an information processing entity, it was “essentially similar to intelligent machines” (Hayles 1999, p. 7). Therefore, the conceptualization of the feedback loop as a flow of information came to put at ease notions of human subordination, thus arriving at the governing concept of first wave cybernetics: homeostasis. In this sense, the “ability of living organisms to maintain steady states when they are buffeted by fickle environments” (Hayles 1999, p. 8), became a patch that simultaneously fixed computers as less-than-human, but also pointed to the anxiety of disembodied information that was growing underneath.

However, since the observer of the ‘feedback loop’ became part of the flow of the system, in the second wave (from 1960 to 1980), cyberneticians reconfigured homeostasis into reflexivity, that is, “the movement whereby that which has been used to generate a system [becomes] part of the system it generates” (Hayles 1999, p. 8). This also became known as autopoiesis (i.e., self-generation), based on writings by Humberto Maturana and Francisco Varela. This second wave leaves the feedback loop behind, since it considers that “systems are informationally closed” (Hayles 1999, p. 10). This means that elements in the system do not see beyond their limits, and the only relation to the ‘outside’ environment is by the concept of a trigger. In this sense, disembodied information was buried deeply into the organization of the system, and the system itself appeared in the form of a cyborg.

Shifting from triggers to artificial intelligence signaled the third wave of cybernetics (from 1980 onwards), whose central concept was virtuality. Development of cellular automata, genetic algorithms, and principally, emergence, led to the formation of the posthuman, or an embodied virtuality. However, in Hayles’s view, the underlying premise of this ‘posthuman’ is that the human can be articulated by means of intelligent machines (Hayles 1999, pp. 17–18). In turn, reconfiguring the concepts of body, consciousness, and technology as inherent to (post-) human life, Hayles argues for the impossibility of artificial intelligence to serve as a proxy for the human. Hayles’s objective is, then, to dismantle cybernetics from its (relative) assumptions, questioning its major achievements over the years and thereby opening the field for new considerations of the body and its material environment within cybernetics, and by extension, of the body in new media:

My dream is a version of the posthuman that embraces the possibilities of information technologies without being seduced by fantasies of unlimited power and disembodied immortality, that recognizes and celebrates finitude as a condition of human being, and that understands human life is embedded in a material world of great complexity, one on which we depend for our continued survival. (Hayles 1999, p. 5)

While her work is focused on the literary narratives that were built in parallel with cybernetics, she leaves incursions in new media theory for other media theorists. This is where Mark B. N. Hansen comes in.

As I describe above, Manovich arrives at this notion of the interface-as-artwork by opposing database and narrative on the semiotic grounds of the reversal of the paradigm and syntagm. In turn, media theorist Mark B. N. Hansen (Hansen 2004) notes that the interface-as-artwork constitutes a disembodied “image-interface” to information in which the process of information itself (in-formation; giving form) is overlooked. Hansen locates the source of this disembodied conception in Manovich’s implicit —but nonetheless evident— premise of the overarching dominance of cinema in contemporary culture, which results in a “disturbing linearity [with] hints of technical determinism” (Hansen 2004, p. 36).

For example, Manovich argues that standardization processes originating from the Industrial Revolution have shaped how cinema is produced and received. Attuned to the perceptual limits of the body, the standardization of resolution can be seen (image dimensions, frames per second, and aspect ratio) and heard (audio bit depth, sampling rate, and number of channels). In this sense, the moviegoer and, by extension, the listener became industrial by-products, determined by the mass-produced electronic devices used for recording and playing. As I have described with Kittler’s technological determinism, the devices driven by industrial forces therefore shaped the body and, by extension, the aesthetics of cinema.

For Manovich, due to the internal role of the database, the logic of new media is no longer that of the factory but that of the interface. Through the interface to a database, the user is given access to multiplicities of narrative, and thus to endless information. The user is granted the power of the database, which in Manovich’s eyes makes the database an icon of postmodern art. In other words, on an aesthetic level, while the mass-standardization and reproducibility of media, the “logic of the factory” (Manovich 2001, p. 30), shaped the form of cinema, post-industrial society and its logic of individual customization shaped the database form. At the bodily level, cinema standardized the perception of the passive body, and the database individualizes experience. However, this individualized experience still constitutes a technological ‘shaping’ of the body, a shaping that is exploded into every user quietly sitting behind the screen.

In opposition to this passivity of the body, Hansen describes images as something that emerges out of the complex relationship between the body and some sort of sensory stimulus. In radical disagreement with Manovich, Hansen considers that the image has become a process which gives form to information, and that this process needs to be understood in terms of the body as a filtering and creative agent in its construction. Drawing from Henri Bergson’s theory of perception, and in resonance with cognitive science, Hansen defines the function of the body as a filtering apparatus. Under this conception, the body acts on and creates images by subtracting “from the universe of images” (Hansen 2004, p. 3). Image creation is world creation, and it is not necessarily in contact with the reality that surrounds the body (or the reality of the body), but it is a result of the embodiment of a virtuality that is inherent to our senses. In other words, through this filtering activity, the body is empowered with “strongly creative capacities” (Hansen 2004, p. 4). The world is a virtuality that is constructed with our senses and our body. The world can only appear if it appears to the body. Therefore, instead of being a passive node, the body actively in-forms data as information (Hansen’s word play). The databaser (database user) makes information out of data by precisely embodying the performative act that I call databasing.

The activity in the receiver’s internal structure generates symbolic structures that serve to frame stimuli and thus to in-form information: this activity converts regularities in the flux of stimuli into patterns of information. (Hansen 2002, p. 76)

The activity of framing, according to Hansen, must be differentiated from that of observation. In this way, “information remains meaningless in the absence of a (human) framer,” (Hansen 2002, p. 77) and framing becomes a resonance of the (bodily) singularity of the receiver. Quoting MacKay’s Information, Mechanism, Meaning (1969), the meaning of a message

…can be fully represented only in terms of the full basic-symbol complex defined by all the elementary responses evoked. These may include visceral responses and hormonal secretions and what have you…an organism probably includes in its elementary conceptual alphabet (its catalogue of basic symbols) all the elementary internal acts of response to the environment which have acquired a sufficiently high probabilistic status, and not merely those for which verbal projections have been found. (Hansen 2002, p. 78)

It is with this conception of framing that Hansen argues that information always requires a frame:

…this framing function is ultimately correlated with the meaning-constituting and actualizing capacity of (human) embodiment…the digital image, precisely because it explodes the (cinematic) frame, can be said to expose the dependence of this frame (and all other media-supported or technically embodied frames) on the framing activity of the human organism. (Hansen 2002, pp. 89–90)

Therefore, in the context of Kittler’s digital convergence, framing prevents the human from being rendered a dependent variable. On the contrary, the framing function of the human body allows the digital to become information. The frame, as Hansen describes, is the human body filtering images from the world, and creating a virtual image that gives form to data. The frame needs to happen as a relation, and thus, it is the temporal instantiation of a process. What would a human body without this framing and filtering capability look like? How would this temporality of the process of information be understood?

In the following interlude I take the concept of an absolute (human) memory to be at an intersection between disembodied theories of information and, precisely, the concept of an embodied memory. The aim is to introduce and differentiate between human and nonhuman in terms of memory and databases. The wonder, admiration, but also the fear and mystery that a notion of embodied memory awakens can speak for the uncanny feeling that occurs whenever databases are involved, and thus can speak for a certain agency of the database. I understand this feeling as what accounts for the aesthetic experience of database music.

I suspect, nevertheless, that he was not very capable of thought. To think is to forget differences…(Borges 1942, p. 2)

The importance of memory (and forgetfulness) can be represented by Jorge Luis Borges’s famous 1942 short story, Funes, the memorious (Borges 1942). Due to an unfortunate accident, the young Irineo Funes was “blessed or cursed,” as Hayles points out (Hayles 1993, p. 156), with an ability to “remember every sensation and thought in all its particularity and uniqueness” (Hayles 1993). A blessing, since a capacity to remember with great detail is certainly a virtue and a useful resource for life in general; a curse, because he was unable to forget and, as a consequence, he was unable to think, to dream, to imagine. Throughout the years, he became condemned to absolute memory, and so to its consequence, insomnia:6 he was secluded in a dark and enclosed space so as not to perceive the world. Hayles focuses on one aspect of the story, namely, the fact that Funes invented (and began performing) the infinite task of naming all integers, that is, of giving a unique name (and sometimes a last name) to each number without any sequential reference. According to Hayles, by carrying out his number scheme, Funes epitomizes the impossibilities that disembodiment brings forth. As Hayles writes, “if embodiment could be articulated separately from the body …it would be like Funes’s numbers, a froth of discrete utterances registering the continuous and infinite play of difference” [emphasis added] (Hayles 1993, pp. 156–59). The point that Hayles touches upon can be seen as the limits and fragility of embodied memory, as well as the need to forget, in opposition to an embodiment ‘outside’ the body (disembodiment) that would have no need to forget. We will see how the difference between forgetting and erasing relates to the database. In that Manovichian world which “appears to us as an endless and unstructured collection of images, texts, and other data records” (Manovich 2001, p. 219), this idea would be perfectly viable.
Indeed, data banks have already been growing exponentially much in the same way as Funes’ memory. This capability of accumulation without the need for erasure is enabled by the database structure inherent in computers. However, the distinction that Hayles presents is crucial: data is not information because information needs to be embodied, and with that embodiment comes the need to forget. In sum, on one hand, a disembodied data bank can have all the uniqueness and difference that is available by the sum of all cloud computing and storage to date; however, on the other hand, embodied memory is available by the human capacity to forget.

Matías Borg Oviedo (Oviedo 2019) relates this incapacity for thought to the negation of narrativity itself, thus finding in the image of Funes a hyperbole for contemporary subjectivity (Oviedo 2019, p. 5), where there is no room for narration, only data accumulation. In this sense, narrativity can be seen as that which resides in the threshold between knowledge and storage. I believe this distinction stems precisely from the difference between information and data. The process of information, of giving form, requires a certain temporality that is not that of the perceptually immediate and extremely operative zero-time of the CPU. Within the zero-time of computer operations (within a millisecond) there simply is no time for narration, only for addition or increment. Narrative is temporal, happening as a historical process, and algorithms are atemporal, operating in a constant now. Since neither data structures nor algorithms operate outside the confines of the millisecond, they can’t spare time to think and, likewise, they can’t forget to count. Counting is all they do, so they cannot tell stories: this is the difference held within the same Spanish word, between contar números [to count numbers] and contar historias [to tell stories]; or, “if a German pun may be allowed: zählen (counting) instead of erzählen (narrating)” (Ernst 2013, p. 128). Therefore, Funes’ accumulative memory represents the overflow of the now, the totally blinding transparency of the world, and an absolute memory that precludes narration. Because he accumulates data of the world in its totality, he does not have time to think: “to think is to forget differences” reads the quote above.
The only one in Borges’ story who actually thinks is the narrator, to which we can add: Funes could not have told this story himself in first-person narrative.7 Thus, we can ask ourselves if this hyperbolic ‘light’ of the Funesian ‘absolute memory’ is not a premonitory figure of the database.8 Because of this antithetical condition between databases and narrative, Manovich proposed that the database became a form on its own, in opposition to narration. To the extent that ‘database form’ is a category that identifies art using databases, this can be considered accurate. However, considering this distinction between embodied and disembodied memory that resides in the ability to narrate, databases are inherently deprived of narration. Making art with databases is making them dance (see below). Therefore, to what extent is ‘database music’ in itself a contradiction if we consider ‘music’ to be a form of writing? I will leave this discussion for a later chapter (See 1).

This Funesian database can also be understood in relation to what Gayatri Chakravorty Spivak (Derrida & Spivak 1976) writes about forgetfulness. She notes in Nietzsche the ‘joyful’ and ‘affirmative’ activity that constitutes forgetfulness as being twofold. On the one hand, this activity is a “limitation that protects the human being from the blinding light of an absolute historical memory,” and on the other, it is “to avoid falling into the trap of ‘historical knowledge’” (Derrida & Spivak 1976 xxxi). The ‘historical’ here is an “unquestioned villain,” which takes two forms: one “academic and preservative,” the other “philosophical and destructive” (Derrida & Spivak 1976 xxxi). For Nietzsche, as Spivak notes, forgetfulness is a choice that comes as a solution: an “antidote” to the “historical fever,” or the “unhistorical,” that is, “the power, the art of forgetting…” (Derrida & Spivak 1976 xxxi). I propose an imaginary experiment that would add some noise to Borges’ short story, however utterly fantastic his writing was. One thing that can be read from the story is that, in order to seclude himself from perceiving the world, or better, in order to forget the world altogether, Irineo stayed in the darkness of his room. This is how he cancelled light, a quite powerful stimulus if memory-space is to be optimized for the purpose of, say, getting some sleep. However, there is little to no mention of the sonic environment in which Funes was embedded —somewhere in the outskirts of the quiet Uruguayan city of Fray Bentos. In fact, the only sonic references are focused on the narrator’s perspective, referring to Funes’ high-pitched and —due to his being in the darkness— acousmatic voice. 
To a certain extent, we might think of Funes’ high-pitched (at least this is how the narrator heard it) voice as a hint to the highlighted overtones that link Borges’ “long metaphor of insomnia” with “the ‘laughter’ of [Nietzsche’s] Over-man [that] will not be a ‘memorial or…guard of the…form of the house and the truth…He will dance, outside of the house, this…active forgetfulness’” (Derrida & Spivak 1976 xxxii). Nonetheless, Funes is deprived of this forgetfulness, and thus cannot go outside, let alone laugh or dance.9 By locking himself inside a room he would have managed to attenuate sound waves coming in from outside. Notwithstanding his isolation (a house arrest), sound waves are actually very difficult to cancel.

(It might be useful to compare Funes’ attempt to filter out the world with John Cage’s quest for silence. An interesting experiment would have been to have John Cage take Irineo to an anechoic chamber and ask him what he can remember then. From Cage’s own experience, we can guess that Funes would effectively remember his own sounding body. Kim Cascone (2000) writes that “[Cage’s] experience in an anechoic chamber at Harvard University prior to composing 4’33” shattered the belief that silence was obtainable and revealed that the state of ‘nothing’ was a condition filled with everything we filtered out” (Cascone 2000, p. 14). Imagine an 80-year-old Irineo at David Tudor’s premiere at Maverick Concert Hall in Woodstock, NY, infinitely listening to 4’33”.)

It is very unlikely (but nonetheless possible) that Borges was aware of American acoustician Leo Beranek’s research for the US Army during World War II, that is, when the first anechoic chamber was built.10 Furthermore, even if Funes had managed to isolate himself completely from the world by cancelling perception altogether, he would still have been left with his memories (he was not deprived of anamnesis, the ability to remember), which were not discrete, but continuous iterations of the world he had accumulated over the years. What this means is that all the sounds he had listened to would be available to his imagination. As far as we can learn from the narrator, while smell is referred to in the story, sound was nonetheless outside of Funes’ concerns. Therefore, one thing we can ask ourselves is how the world would sound for Irineo Funes. The task is not difficult to imagine: the world would be inscribed in poor Irineo’s memory in such an infinitely continuous way that each fraction of wave oscillation would be different, unique, leaving no space for repetition of any kind. For example, one of Irineo’s concerns was to reduce the number of memories on a single day, which he downsized to about seventy thousand…. What would be Funes’ sample rate? What frequencies would he be able to synthesize? All sounds (and all that can be registered) would be listened to completely, with every infinitesimal oscillation of a wave pointing to the most utterly complete scope of imaginable references. A complete state of listening. In fact, we might not be able to call it listening any more. Not even signal processing. An infinitesimal incorporation of sound is unthinkable. Within such total listening there would be no possibility for thought, no processing of any kind, and no synthesis: only infinite accumulation and storage.
On the one hand, no matter how accurate our embodied listening might be, we are bound to miss some motion: some waves would pass through us and we would be busy forgetting to register them. On the other hand, if such a recording were humanly possible, thinking would cease to be so. This can be thought of as the intersection of the finite with the infinite: while Funes’ nonhuman memory corresponds to a dynamics of the infinite, his body is quite human. Funes is not deprived of this finitude, and exists (fantastically) in a ghostly liminality. This liminality grows more and more evident throughout the story, hand in hand with the cumulative growth of the Funesian database, all the way until the end, in a sort of Moore’s Law of data congestion, saturating completely in an utterly human pulmonary congestion (Oviedo 2019).

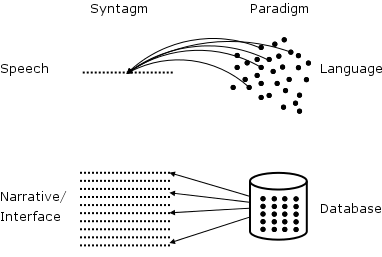

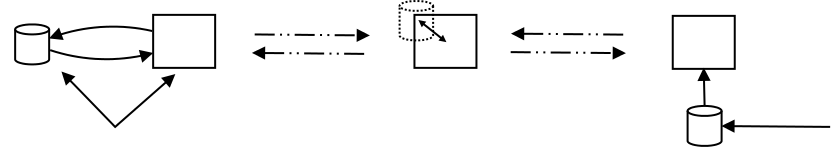

Despite Manovich’s technologically determined considerations of the database as form, he notes a fundamental aspect of the use of the database when he expresses that data need to be collected, generated, organized, created, etc: “Texts need to be written, photographs need to be taken, video and audio need to be recorded. Or they need to be digitized [and then] cleaned up, organized, and indexed” (Manovich 2001, p. 224). In this sense, he begins to describe the actions that need to be performed around data, what I call databasing (See 2.1), which connotes the use of databases in terms of their performativity (See 2.1 and 3). He even goes further and proposes that this activity has become a “new cultural algorithm,” (Manovich 2001, p. 225) (See Figure 1.2).

The world is mediatized, stored in some media (film, tape), then digitized into data, then structured into a database. The result is the world represented by the database.

While Manovich calls for an “info-aesthetics” (Manovich 2001, p. 217), as well as a poetics and an ethics of the database, neither Manovich nor the following generation of media artists and theorists carried out an exhaustive account of an aesthetics of the database. Several authors continue to abide by Manovich’s claim that the aesthetics of the database, or the database as form, is a symptom of the uncritical use of database logic throughout the visual art world of the 1990s. It is in hindsight that his argument can be understood as grounded on the same disembodied constructions that prevent him from including human agency in his account. In any case, his contribution to the literature on the role of databases in new media has led us to this inflection point, from which we can consider different points of view regarding the topic of databases. My revision of this algorithm will come later in this dissertation (See Figure 1.2). In what follows, I will explore the more technical aspects of databasing, in order to trace a connection between the literature on sound-based computer practices and that of the development of databases over the years. In this way, I bring the discussion of databases into the sonic sphere.

The first step in working with a database is the collection and assembly of the data…. Sorting determines the sequence of presentation, while filtering gives rules for admission into the set presented [,] resulting in a database that is a subset of the “shot material” database. Editing is selecting from the database and sequencing the selections…. To go further: for a filmmaker the term “cutting,” as “editing,” loses its meaning, and “sorting,” “assembling,” and “mapping” become more apt metaphors for the activity of composition. (Weinbren 2007, p. 71)

Like Manovich, Weinbren finds a redefinition in filmmaking that stems from the selection processes that the database calls for: data collection, generation, and assembly. Weinbren further breaks the selection process into sorting and filtering. With this new terminology, Weinbren makes a linguistic shift from ‘editing’ and ‘cutting,’ to ‘sorting,’ ‘assembling’ and ‘mapping.’ This linguistic shift is significant in the sense that it highlights the practice that is ‘under’ the filmmaker: databasing.

Databasing is the term I have chosen to describe the practice of the database, that is, a term that includes the elements and actions of database practices, together with their temporality. The elements of databasing are the different data types and structures that build more complex database systems. The actions of databasing are, on the one hand, the type of operations that a database allows, and on the other, the bodily activity that occurs before and after these operations. That is to say, since the operational level occurs below the perceptual threshold of the body, I consider the actions surrounding the immediacy of computations to be defining aspects of databasing.

Depending on the programming language, data types may or may not be part of a data structure, and they store different types of values such as int, float, char. These types are then translated into binary by the compiler. Grouping values of one type into larger sets results in arrays. For example, in the C programming language, programmers ‘declare’ variables first (e.g., unsigned char age) and then ‘initialize’ them with some data (e.g., age=30). A simple variable like one’s ‘age’ needs only one value, and given that the unsigned char data type only stores values from 0 to 255, it is safe to use in this case: no age can be negative, no human can live longer than 255 years.

A data structure is a set of data types kept generally in contiguous slots in memory space. It is built for fast allocation and retrieval. A very simple data structure can be thought of as, for example, a person’s name together with an age (See Listing [lst:person]).

    typedef struct Person {
        unsigned char age;
        char name[128];
    } Person;

At this point it is important to refer to the higher or lower levels of computer software. Software that is ‘higher-level’ is software whose simplest operations are composed of multiple smaller operations. The user can thus ‘forget’ about certain complexities that come from low-level programs, such as memory management. In this sense, low-level programs operate ‘closer’ to hardware, and programmers need to work at a more granular level. While the above data structure contains low-level features such as setting the size of the name array, it releases the programmer from thinking about binary conversion. This means that unless you are changing values directly on the memory card (which is unthinkable), there will most likely be an underpinning software layer.

Ordinary home computers are so fast that high-level operations happen below the perceptual level (generally below 1-2 milliseconds), hence, for example, the capability for real-time audio processing at high-quality sample rates. Therefore, the temporality of activity before and after potentially very large computations feels almost immediate. This means that the body continues almost as if nothing had happened besides a click, or besides the pressing of a key. The immediacy of computation is certainly a feature, arriving at extremely fast operations in no time (or zero-time). It is what feels like ‘magic’ around computers: ask a computer to count to 1000, and it already has….

However, it may become a bug if we consider the computer as a tool to understand the world. As Manovich claimed, the world understood with computers is not only one that is presented in binary terms, it is one constructed upon a specific set of data structures with their set of algorithmic rules. The better and more efficient the data structure is, the better and faster the algorithm. In this light, it can be argued that software development is essentially data structure development. At every software release, the software becomes more efficient, using less or more restricted memory space, etc., affecting the scope of its functionality as well as the speed at which it runs. Glancing at the evolution of software in terms of data structure efficiency, therefore, is glancing at a constantly accelerating stream of bits. Because it is immediate, software is incorporated immediately, thus narrowing the temporal window for framing.

This is why the temporality of databasing is context-dependent. As Hansen pointed out, the world can only appear if it appears to the body (See 1.5). Data structures, therefore, are very efficient storage devices that have no relation to worlds in themselves, but that are the condition for the possibility of world creating with computers. In this way, the programmer feeds into the computer a notion of world that is then returned by the computer’s performance. Each data structure is the result of a feedback network. On one hand, this network refers to the history of software development, in the sense that each software release is an instance of the much larger event that is software in general. On the other, the network links this history with the practice at hand for which the software is being designed. The sound of a computer music oscillator, for example, even if it were programmed today from scratch, would have embedded histories of computer software design, computer music history, etc.

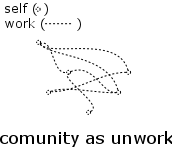

What is important to note here, is that these interrelations of what is already there in software development can be thought of as resonances colliding their way into stability; a stability that emerges not only as a ‘stable release’ of the code, but also as the condensed multiplicity of worlds that is displaced into a software package. Therefore, data structures are world-making and world-revealing devices that engage with our own capacity for virtuality, and thus they are nodes in our world-making networks.

As with other new media, the terminology used to describe computer memory is often borrowed from earlier media practices like printed text: reading, writing, and erasing. Besides terminology, computer memory shares with writing the property of hypomnesis, that is, of displacing the role of human memory with an external nonhuman device. In the case of computer memory, however, the scale of this displacement is extremely large, in terms of both the amount and the speed of data storage. The 4000 numbers of 40 bits each that von Neumann et al. were aiming at for their memory ‘organ’ (which was more than plenty for the computational purposes required at the time) amount to about 20 kilobytes (4000 × 40 bits = 160,000 bits = 20,000 bytes), something which might seem insignificant in comparison to current computer storage capabilities found in cloud computing. In light of this fact, we might ask ourselves how human work is transformed through interaction with these massive external memories. When designing computer software for art, the way in which data is structured, together with the speed and design of data flow, has significant effects on the temporality of art altogether as a practice, both from the practitioner’s perspective and from the perceived result.

Programming decisions geared towards software production are grounded on the possibilities that the combinations of data structures allow. However, the programmer can design and change these structures, and each new design has direct effects on the way in which the user behaves around the software. For example, effective data structures will enable faster computations, and thus the user’s experience of time with the software will be different. In other words, a click of the mouse might condense a multiplicity of actions within an instant, and this condensation accelerates thought processes and bodily actions. Given the hypomnesic quality of data structures together with the infinite potential of expansion that computer memory enables, these actions of the body have the possibility to be at once analyzed and projected towards future activity. In this sense, as users, the next ‘click’ or the next movement of the body might already be suggested by the predictive analysis of a given database or by the tendency of a certain dataset. When these clicks constitute the production of an art work, then, programming decisions have an effect on the result, particularly in terms of style. In fact, many of the programming concerns in computer music software during the 1980s are grounded on a certain search for stylistic neutrality (See 3.3). Certain programming decisions are audible as a timbral quality of sound-making software, to the point that, as listeners, we can identify a work of music as having been composed in one specific software. For example, when we listen to music and recognize a certain ‘sound’ of a software, that is, the ‘sound’ of Pure Data or of Csound, it is reasonable to advance that this ‘sound’ lends a certain aesthetic quality to the work that comes as a contingency of the programming decisions of the software itself, as well as of the use of the software.
The flexibility that comes with open source software, however, extends the limits of applicability of these structures and of the aesthetic possibilities that emerge from them. I comment on this effect of programming decisions in 3.2.4.0.4.

I have proposed that memory, with its storing of instructions and information, is what enables the computer as such. The simplicity of this synthesis of data and command in von Neumann’s architecture led to its implementation not only in the mainframe computer for which he had intended it, but also in the ordinary computer as we know it today. Without this architecture, computers would only be able to perform very simple arithmetic operations (like pocket calculators). That is to say, without the computer’s ability to store data (the memory organ), the partial differential equations that von Neumann was aiming at solving would not have been possible. In these equations, the next value of the solution depends on the present value. Therefore, when iterating through every step of the solution, the function in charge of solving the equation needs to access the present value, change it, output the next value, and finally update the present value with the outputted result (See [lst:neumann]). In order to provide such solutions, von Neumann proposed that: “not only must the memory have sufficient room to store these intermediary data but there must be provision whereby these data can later be removed” (von Neumann & Burks 1946, p. 3).

    present = 0
    next = 0
    iteration {
        next = function(present)
        output = next
        present = next
    }

Inasmuch as the completed device will be a general-purpose computing machine it should contain certain main organs relating to arithmetic, memory-storage, control and connection with the human operator. It is intended that the machine be fully automatic in character, i.e. independent of the human operator after the computation starts (von Neumann & Burks 1946, p. 1).

Data structures are the turning point of the history of the database. Their appearance enabled the performance of automated algorithms. Within the history of computer technology, data structures first appear with John von Neumann’s designs for the computer architecture (von Neumann & Burks 1946). Von Neumann and his team implemented Alan Turing’s original concept for a general-purpose computing machine. Of the “certain main organs,” memory-storage enables the computer’s architecture as we know it today. On one hand, the storage unit of the computer allows data to be written and erased in different locations and times. On the other, the stored data can be not only values to be used during computation, but also the algorithmia itself, that is, the commands (functions, operations, routines, etc.) which are used to access and process data for computation. Thus, the interaction of data and command is what defines data flow inside the computer.

Consider, for example, how curator Christiane Paul describes the database as a “computerized record-keeping system,” that is, “essentially a structured collection of data that stands in the tradition of ‘data containers’ such as a book, a library, an archive” (Paul 2007, p. 95). However, when Paul suggests that databases are simply an instance of data collection, this only points to the passivity of the container, and not to the potential that it has. A good analogy would thus be a book with the capacity to read itself, if reading were going through every letter in an orderly fashion. A database can also be understood as a library with no need for librarians because all queries are immediate; or, an archive without archeion. These considerations will be developed in the next chapter. While the more general practices of collecting and classifying data are part of the practice of databasing, on some level of the computer architecture, databasing comprises data flow within the von Neumann architecture. This fact marks a distinction that is better seen in relation to networks. Extending computers via networks like the Internet makes databasing a global activity that expands and changes with every user. This is why I propose that databasing reconfigures the passivity of data containers such as books, libraries, and archives, with a powerful agency that resonates aesthetically.

In order to understand how databases have changed the way we think of earlier types of containers, we need to review the differences between database models over time. By doing this, I plan to reconfigure the notion of a database system. In general, database systems have been used in businesses, namely for administration and transaction. However, narrowing database systems this way raises the similarities or differences between systems to the level of the interface. I propose to delve into the structures of the models to find how the computer itself can be thought of as a database tree, and how databasing can be thought of as the activity around databases, or simply: database performance. The main purpose of the following account is to understand how computer-based sound practices have participated as a particularly resonant branch of the database tree.

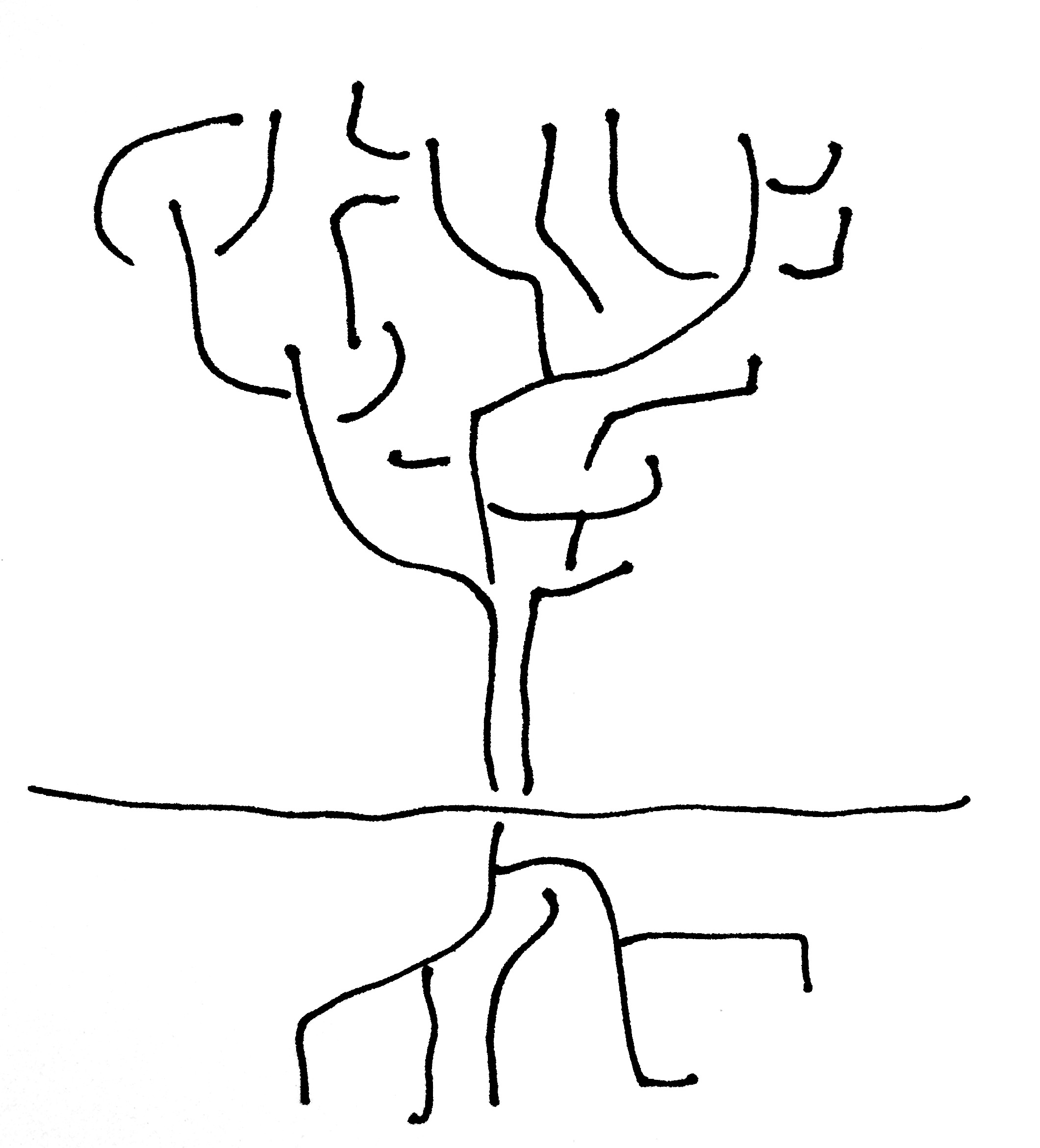

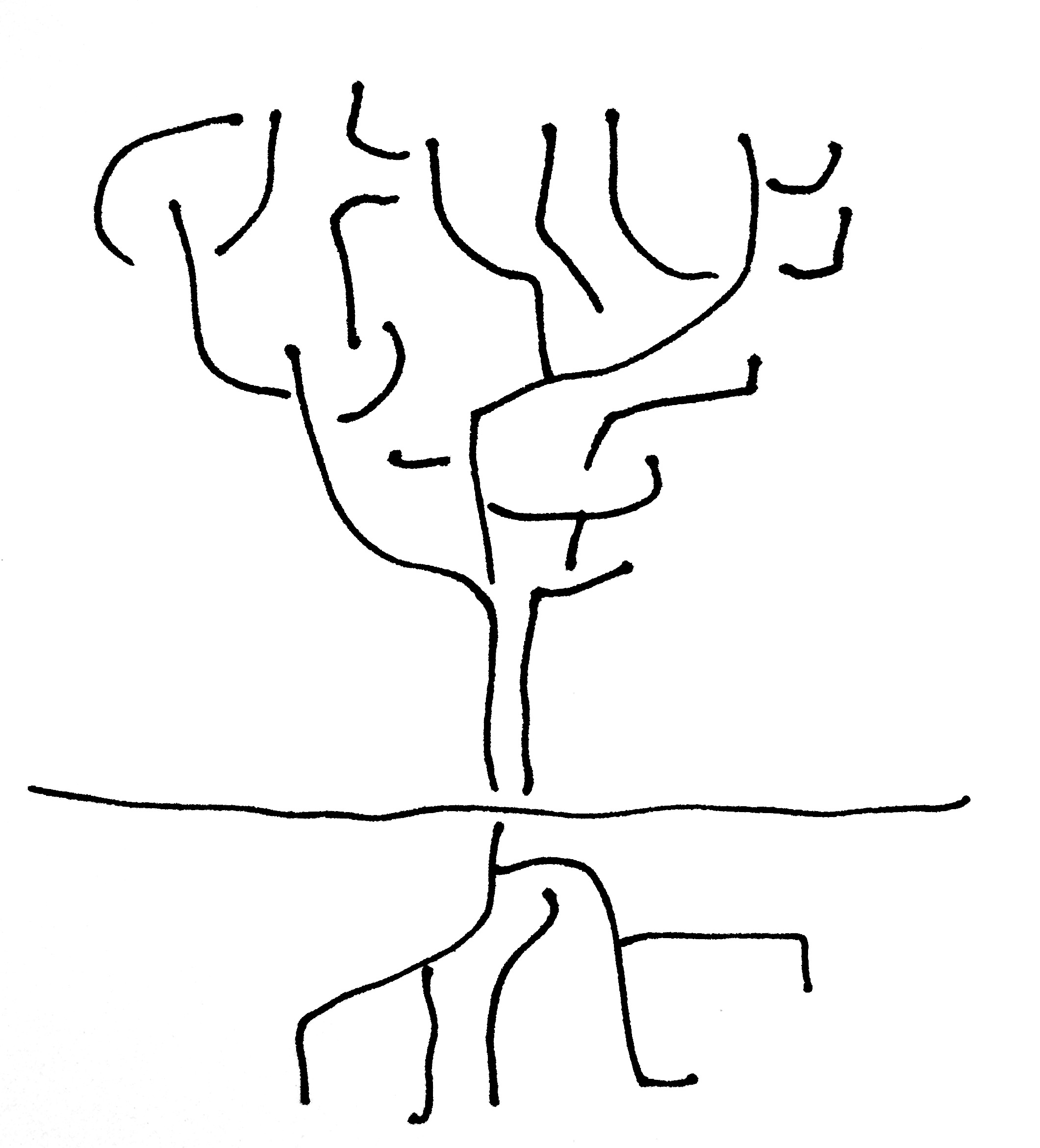

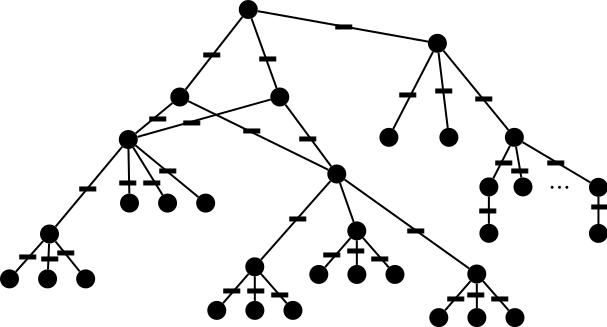

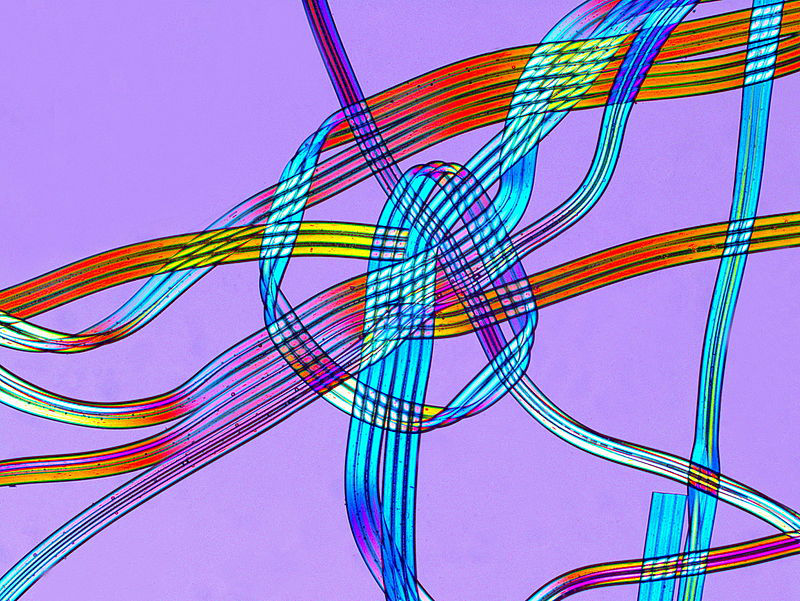

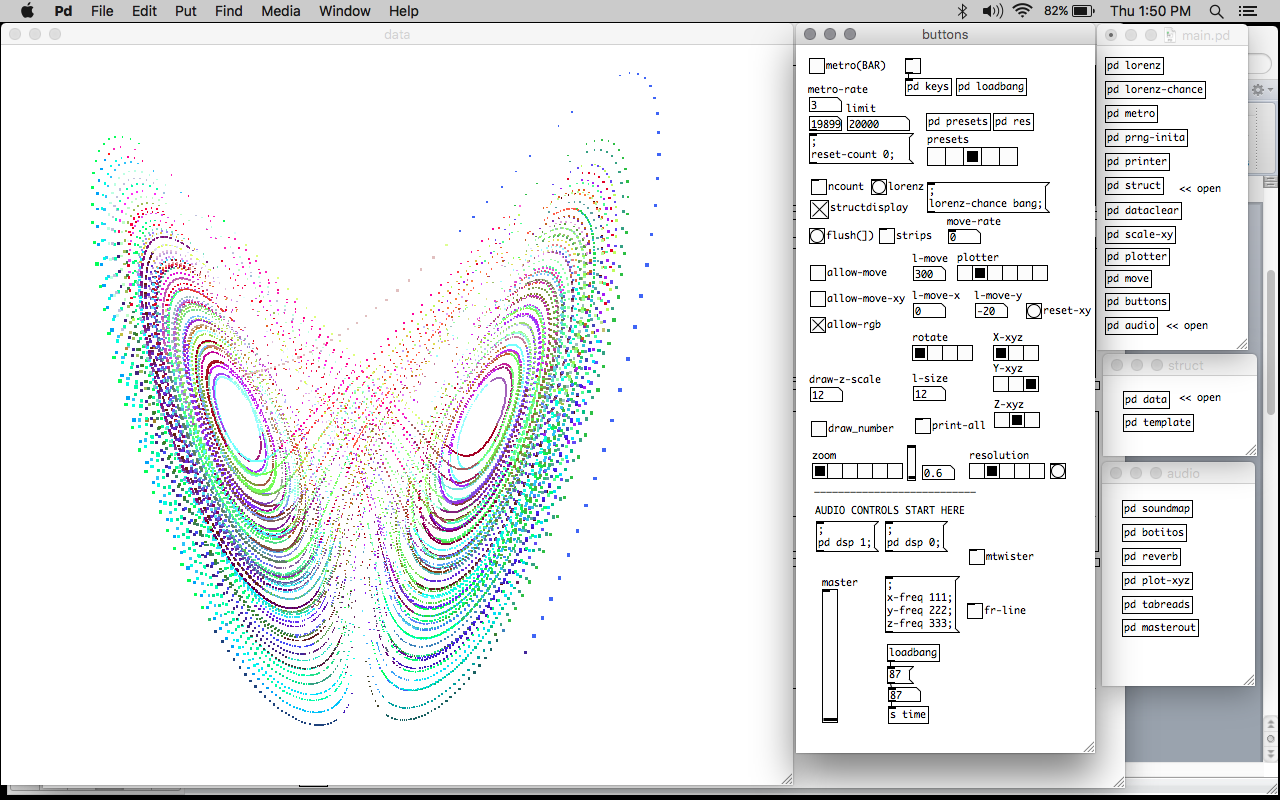

The common use of the word ‘database’ within computer science came around the 1960s, when computers became available to companies throughout the United States of America. For the purpose of data processing, software developers began designing DBMSs, which are still in great demand among contemporary companies. The computer’s capability for data processing and storage is inherent in the constitution of database systems. In fields such as CAC, working with computers means being part of a system. The human operator has been regarded, for example, as a co-operator (Mathews 1963). A further approach understands humans operating with computers as another component of complex systems (Vaggione 2001). In this section, I describe the different levels of database systems as a tree (see Figure 2.1), starting from basic data structures to more elaborate database systems, and then present a brief history of how databases were designed.

A very simple sketch of a tree representing the database tree of computer evolution

The tree is built on different interpretations of the Von Neumann architecture. That is to say, while this architecture went through several optimizations over the years, its three central aspects remained. Therefore, despite the fact that different industry standards for hardware construction resulted in different kinds of operating systems, the core elements of the architecture remained the same: memory (for data and program/code), central processing unit, and input/output interfaces.

The below-the-soil level is accessed through machine and assembly code, which constitute the core of low-level programming languages and are, to a certain extent, unreadable by humans: the world of bits. Above the soil, readability by humans is the main feature.

The database tree metaphor relates to the concept of portability. The database tree only takes the form of a tree once it is instantiated as software and run. That is to say, the database tree unfolds every time it is opened, and in this unfolding emerges the possibility of dynamically adapting to different soils. This is what is known in the programming world as defining conditions or macros. With these definitions, programs can compile with different compilers, across a variety of hardware and operating systems. Therefore, these database trees have as their main feature the capacity to unfold their roots in different directions upon demand.

The trunk of the tree is composed of data types and structures that provide flow between stored (underground) data and the above-ground components. Programming languages handle data types differently, but in essence, data types and structures are usually built in layers going from the lowest (close to roots) to highest levels.

These language layers, after they reach a certain level of complexity, begin to form boughs or limbs that, while being separated from each other, are linked to the same trunk and roots. I consider branches to be programs with text-based interfaces such as Bash, C, C++, Python, Java, etc. Their defining feature is their generic functionality.

More complex programs built on top of branches, such as Pure Data, SuperCollider, R, Octave, Processing, openFrameworks, etc., are dedicated to a narrower scope of tasks. Their defining feature is their level of specialization for the task at hand: sound synthesis, statistics, visuals, etc. They might be more application-specific. In general, these programs are commonly considered layers on top of other languages, libraries, or software frameworks.

User interfaces (or GUIs) are the leaves of the tree. I relate the photosynthetic quality of leaves with user input/output interaction. Despite their simple, user-friendly appearance, software leaves are highly complex systems such as multimedia editors (Adobe Creative Suite or Microsoft Office), Internet browsers, mobile apps, etc. A particular kind of leaf is the DBMS, generally used in businesses for data processing and editing, for example: MySQL, PostgreSQL, NoSQL, CouchDB, and MongoDB.

An important feature of database trees is their network capabilities. Networks can be established by connecting leaves, branches, or roots with each other, both within the same tree and with other trees. For example, software can establish a network between its graphical interface and its core program, as is the case with Pure Data. Another example is the way in which DBMSs interact with data: the MySQL database model allows the user to load a data set in working memory, and establishes a connection between the opened memory and the input/output mechanisms. Networks of trees are data streams running by way of an IP and a client-server type of relation. Cloud storage services such as Google Drive, iCloud, OneDrive, and Dropbox are used as a networked way to store and share data. One tree can serve as data storage and processing repository, and other client trees can connect to the server tree and request data or processing of data from it. This is the essence of the Internet and all the communication services that it enables, such as email services, social networking sites, and multi-user collaboration platforms like GitHub. This allows software like Pure Data and MySQL to have their respective core program and data sets in one computer, and their interfaces on a different one.

Combining networked databases with computer clusters forms what is known as cloud computing. For example, most universities provide clusters for data processing (e.g., NYU’s Prince cluster) that can be accessed from remote locations. These clusters are massive server architectures made out of multiple processing and memory units joined together. These architectures began developing in the 1990s, coining terms like data mining (Kamde & Algur 2011), data warehouses, and data repositories (Silberschatz et al. 1995).

Data structures are the building blocks upon which the entire database model is designed. A data structure is a way to organize data so that a set of operations on its elements is possible, such as ADD, REMOVE, GET, SET, FIND, etc. Data structures can be thought of in two ways: either as implementations or as interfaces, the latter also known as abstract data types:

An interface tells us nothing about how the data structure implements these operations; it only provides a list of supported operations along with specifications about what types of arguments each operation accepts and the value returned by each operation. (Morin 2019, p. 18)

In other words, the abstract data type represents the idea of the structure. When abstract data types are implemented in code, the speed and efficiency of the data structure can be physically evaluated. An implementation of this sort includes “the internal representation of the data structure as well as the definitions of the algorithms that implement the operations supported by the data structure” (Morin 2019, p. 18). Because of the consequences that design has on computational performance, data structures have constituted a focal research point in the database and computer science communities.
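The distinction between interface and implementation can be sketched in code. In the following minimal example, the names `ListADT` and `ArrayBackedList` are hypothetical; the interface only names the operations listed above, while the implementation chooses an internal representation (here, assumed to be a built-in Python list).

```python
from abc import ABC, abstractmethod


class ListADT(ABC):
    """Interface: names the supported operations, says nothing about storage."""

    @abstractmethod
    def add(self, index, value): ...

    @abstractmethod
    def remove(self, index): ...

    @abstractmethod
    def get(self, index): ...

    @abstractmethod
    def set(self, index, value): ...

    @abstractmethod
    def find(self, value): ...


class ArrayBackedList(ListADT):
    """One possible implementation: the internal representation is a Python list."""

    def __init__(self):
        self._items = []

    def add(self, index, value):
        self._items.insert(index, value)   # shifts later elements to the right

    def remove(self, index):
        return self._items.pop(index)      # returns the removed element

    def get(self, index):
        return self._items[index]

    def set(self, index, value):
        self._items[index] = value

    def find(self, value):
        # Returns the first index holding `value`, or -1 if it is absent.
        try:
            return self._items.index(value)
        except ValueError:
            return -1


notes = ArrayBackedList()
notes.add(0, "C4")
notes.add(1, "E4")
notes.add(1, "D4")   # inserted between C4 and E4
notes.set(2, "G4")   # replaces E4
```

Any other class that implements the same five methods, for instance one backed by linked nodes, could replace `ArrayBackedList` without changing the code that uses it; only speed and memory use would differ.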

Arrays constitute one of the oldest and most basic data structures. They are contiguously stored, same-type data elements referenced by indices. Most programming languages have implemented arrays. Most real-time software loads sound files or images to working memory as an array (or a buffer) of contiguous samples or pixels. Arrays use fewer resources when reading than when writing, since accessing their elements is achieved by pointers, but editing demands copying large portions of the array back and forth.
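These properties can be observed with the `array` module of Python's standard library. The sketch below assumes a hypothetical buffer of four signed integer samples; the sample values are invented for illustration.

```python
from array import array

# A buffer of same-type elements: type code "h" means signed short integers.
samples = array("h", [0, 1200, -1200, 300])

# Reading: an index leads directly to the element.
third = samples[2]

# Same-type constraint: a float cannot be stored in an integer array.
try:
    samples.append(0.5)
    accepted_float = True
except TypeError:
    accepted_float = False

# Editing: inserting near the front moves every later element one slot over.
samples.insert(1, 999)
```

The read touches a single element, whereas the insertion rewrites everything after position 1, which is the asymmetry between reading and writing described above.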

One important technical shift in the use of data structures came with the concept of linked lists. A linked list is a collection of data (usually a symbol table), with pointers to the ‘previous’ and/or ‘next’ item on the list. Linked lists are built to maintain an ordered sequence of elements. This functionality was only available after the FORTRAN ’77 programming language (1977) and later it became integrated in the C programming language (Kernighan 1978). Linked lists differ from arrays since they can hold multiple data types (including arrays and other data structures), and they are accessed by traversing the list using the ‘previous’ and ‘next’ pointers. In the programs developed during the SSSP and CAMP years (see 3.3.1), linked lists were used in the (then) very recent C programming language. Ames (1985) as well as Rowe (1992) used linked lists, the former to represent melodies within an automated composition system, the latter within the Event data structures of the interactive music system Cypher.
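A doubly linked list can be sketched as follows. The class names `Node` and `LinkedList` and the melodic content are hypothetical; this is not the code used by Ames or Rowe, only an illustration of ‘previous’ and ‘next’ pointers holding items of different types.

```python
class Node:
    """One item on the list, with pointers to its neighbours."""

    def __init__(self, value):
        self.value = value
        self.prev = None
        self.next = None


class LinkedList:
    def __init__(self):
        self.head = None
        self.tail = None

    def append(self, value):
        """Add a node at the end of the list and return it."""
        node = Node(value)
        if self.tail is None:          # empty list: node is both head and tail
            self.head = self.tail = node
        else:
            node.prev = self.tail
            self.tail.next = node
            self.tail = node
        return node

    def insert_after(self, node, value):
        """Insert a new node after `node`; only pointers change, nothing is copied."""
        new = Node(value)
        new.prev = node
        new.next = node.next
        if node.next is not None:
            node.next.prev = new
        else:
            self.tail = new
        node.next = new
        return new

    def forward(self):
        node = self.head
        while node is not None:
            yield node.value
            node = node.next

    def backward(self):
        node = self.tail
        while node is not None:
            yield node.value
            node = node.prev


melody = LinkedList()
first = melody.append("C4")        # a symbol
melody.append(["E4", "G4"])        # an array (a two-note chord)
melody.insert_after(first, 0.5)    # a number (e.g., a duration)
```

Reaching an item requires traversal from one end, but inserting in the middle only rewires the neighbouring pointers.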

Crowley (1998) claims, however, that neither linked lists nor arrays are suitable for large text sequences, since linked lists take up too much memory, and arrays are slow because they require too much data movement. Nonetheless, he argues, “they provide useful base cases on which to build more complex sequence data structures” (Crowley 1998). In fact, data structures are generally built from arrays and linked lists. For example, in designing Audacity, Mazzoni & Dannenberg (2001) implemented the concept of sequences as a set of small arrays whose pointers were traversed in a linked list. As a result, large audio files could be loaded and edited with very short processing times.
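The general idea of combining both structures can be sketched as a chain of small blocks. The following is not Audacity's implementation: the names `Block` and `BlockSequence`, the block size, and the splitting strategy are assumptions made for illustration, and a realistic design would also merge or rebalance blocks after many edits.

```python
class Block:
    """A small array of samples plus a pointer to the next block."""

    def __init__(self, samples):
        self.samples = list(samples)
        self.next = None


class BlockSequence:
    def __init__(self, samples, block_size=4):
        # Cut the input into small arrays and chain them together.
        self.head = None
        tail = None
        for start in range(0, len(samples), block_size):
            block = Block(samples[start:start + block_size])
            if tail is None:
                self.head = block
            else:
                tail.next = block
            tail = block

    def blocks(self):
        block = self.head
        while block is not None:
            yield block
            block = block.next

    def insert(self, position, new_samples):
        """Insert samples at `position`; only the block at that position is split."""
        offset = position
        for block in self.blocks():
            if offset <= len(block.samples):
                after = block.samples[offset:]
                block.samples = block.samples[:offset]
                inserted = Block(new_samples)
                if after:
                    rest = Block(after)
                    rest.next = block.next
                    inserted.next = rest
                else:
                    inserted.next = block.next
                block.next = inserted
                return
            offset -= len(block.samples)
        raise IndexError("position out of range")

    def to_list(self):
        return [s for block in self.blocks() for s in block.samples]


sequence = BlockSequence(list(range(10)), block_size=4)
sequence.insert(5, [100, 101])
```

The insertion splits only the second block; the first and last blocks are never copied, which is what keeps edits cheap as the total length grows.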

I propose now to extend the concept of abstract data types to the concept of database models. Database models are the realm of data structures. These models, to be described below, constitute the abstract ways in which data can be organized within a database system. DBMSs, in turn, are a specific type of software aimed at organizations, website design, server architectures, and company management, among other uses in the business sector. Since an analysis of these systems falls outside the scope of this study, I provide a glimpse of the structure of the models without entering into their implementation. Figure [tab:dbmodels] shows a development timeline that serves as a context for the appearance of these models. Their emergence over the years goes hand in hand with hardware and programming language development. Further, several implementations of these models depended on the development of specific languages, such as a DDL for structural specification of data, and a DML for accessing and updating data (Abiteboul et al. 1995, p. 4).

Angles & Gutierrez (2008) name the three most important aspects a database model should address: “a set of data structure types, a set of operators or inference rules, and a set of integrity rules” (Angles & Gutierrez 2008, p. 2). Operators can be understood as the set of routines that constitute the query language and data manipulation. Integrity rules can be understood as data constraints preventing redundancy or inconsistencies, and checking routines preventing false queries. In a similar way, for Abiteboul et al. (1995) a database model “provides the means for specifying particular data structures, for constraining the data sets associated with these structures, and for manipulating the data” (Abiteboul et al. 1995, p. 28). However, data manipulation (operators) and constraints (integrity) are built around the data structure, which is why, Angles & Gutierrez (2008) continue, “several proposals for [database] models only define the data structures, sometimes omitting operators and/or integrity rules” (Angles & Gutierrez 2008, p. 2).
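The three aspects can be made concrete with a small sketch. The class name `ReservationTable`, its fields, and the sample values are hypothetical and do not correspond to any particular DBMS; the example only separates a data structure (keyed records), its operators (`insert`, `select`, `delete`), and one integrity rule (no duplicate keys).

```python
class ReservationTable:
    def __init__(self):
        # Data structure: records keyed by (flight, seat).
        self._rows = {}

    # Operators: the routines through which the data is queried and changed.
    def insert(self, flight, seat, passenger):
        key = (flight, seat)
        # Integrity rule: the same seat on the same flight cannot be stored twice.
        if key in self._rows:
            raise ValueError(f"seat {seat} on flight {flight} is already booked")
        self._rows[key] = passenger

    def select(self, flight):
        """Return a seat-to-passenger mapping for one flight."""
        return {seat: name for (f, seat), name in self._rows.items() if f == flight}

    def delete(self, flight, seat):
        return self._rows.pop((flight, seat))


table = ReservationTable()
table.insert("NY100", "12A", "passenger 1")
table.insert("NY100", "12B", "passenger 2")

try:
    table.insert("NY100", "12A", "passenger 3")   # violates the integrity rule
    double_booked = True
except ValueError:
    double_booked = False
```

Removing the check inside `insert` would leave the data structure and operators intact while silently overwriting the first booking, which shows why a model that defines only the data structure is incomplete in the sense described above.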

In essence, all DBMSs share the same function: to provide access to a database. This access, however, is restricted by the imperatives of the model. Database models have been thought of as collections of conceptual tools to represent real-world entities and their relationships (Angles & Gutierrez 2008, p. 1). In this sense, the models are fit to achieve a level of specificity and efficiency that is integrated with the notions of economic success. That is to say, the quality of database access has a direct influence on the operational level of businesses. For example, if the database system in charge of airline reservations fails to update an entry or does not restrict duplicates, this might result in either empty airplanes or double-booking, an economic loss that might result in a company going out of business. In relation to data structure design within CAAC software, Ariza (2005a) claims that design choices determine “the interaction of software components and the nature of internal system processing” (Ariza 2005a, p. 18). Luckily, a failed database access in music might come as no more than a minimal performative ‘bump’ that can be otherwise forgotten. However, it is imperative that these models are analyzed because of the continuum between data structures and database models, and because of the internal relations that resonate from these structures to the implementations of computer music software. Therefore, to a certain extent, database models and computer music software share the resonance of data structures, and belong to their realm.

Diagram of the hierarchical model

The hierarchical model was developed at IBM during the early 1960s, in conjunction with other American manufacturing conglomerates for NASA’s Project Apollo, resulting in IMS (Long et al. 2000). The hierarchical model is closely linked to the architecture of data within a computer. Therefore, it interprets records as collections of single-value fields that are interconnected by way of paths. Records can have type definitions, which determine the fields they contain. As a rule of this structure, a record can be linked upwards to only one parent record and downwards to many child records. The structure stems from a single ‘root’ record, which is the initial parent-less record through which all other records are accessed.
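The rules of this structure can be sketched as a tree of records. The following does not reproduce IMS: the `Record` class, the `RECORD_TYPES` definitions, and the library/album/track content are hypothetical and serve only to show typed single-value fields, the one-parent rule, and access through the root.

```python
# Hypothetical type definitions: each record type determines its fields.
RECORD_TYPES = {
    "library": {"name"},
    "album": {"title"},
    "track": {"title", "duration"},
}


class Record:
    def __init__(self, record_type, **fields):
        if set(fields) != RECORD_TYPES[record_type]:
            raise ValueError(f"fields do not match type '{record_type}'")
        self.record_type = record_type
        self.fields = fields       # single-value fields
        self.parent = None         # at most one parent
        self.children = []         # any number of children

    def add_child(self, child):
        if child.parent is not None:
            raise ValueError("a record can be linked to only one parent")
        child.parent = self
        self.children.append(child)
        return child

    def path(self):
        """Record types on the path from the root down to this record."""
        steps, node = [], self
        while node is not None:
            steps.append(node.record_type)
            node = node.parent
        return list(reversed(steps))


def find(root, record_type):
    """Depth-first access: every record is reached by descending from the root."""
    stack = [root]
    while stack:
        record = stack.pop()
        if record.record_type == record_type:
            yield record
        stack.extend(reversed(record.children))


root = Record("library", name="archive")
album = root.add_child(Record("album", title="first album"))
track_a = album.add_child(Record("track", title="opening", duration=180))
track_b = album.add_child(Record("track", title="closing", duration=240))
```

A query for tracks has no entry point other than `root`, and attempting to attach `track_a` to a second album raises an error, since the one-parent rule forbids it.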